“Content tagging” is not the sexiest phrase in the Learning & Development (L&D) space.

Nobody stands up in a meeting and asks, “When can we start content tagging already? I’m soooooooo excited!”

But proper content tagging is something your learning experience platform (LXP) partner should excel at, not mention as an afterthought. If yours doesn’t, it may be time to explore other options.

Unfortunately, most learning content is poorly tagged, either too broadly or not at all. The result is inaccessible learning: content that’s hard to find in search, recommendation engines left to make uninformed rather than data-driven guesses, and often flat-out irrelevant results.

If your audience complains that they can’t find the resources they need, or your learning platform is serving up bad recommendations and search results, poorly tagged content is the likely culprit.

Investing in learning at your organisation is not — and should not be — something taken lightly.

There are many things to consider when choosing a learning partner. Done right, investing in learning carries a substantial (yet very justifiable) cost to help your employees learn and develop the skills they need to grow within your organisation. And learning will not happen without efficient, accurate, timely, helpful content.

This is where tagging comes into play.

Why proper content tagging is essential

Let’s be honest: We have a content overload problem.

While it’s hard to pinpoint the exact number, one company estimates 1.145 trillion MB of data are created per day.

And “too much content” is arguably the #1 problem with learning content.

Good news: Proper content tagging can help overcome (learning) content overload.

One quick (simple?) solution for learners is to “nuance the search term.” For example, instead of searching “leadership,” a learner could be more specific, say, “transformative leadership” or “authentic leadership.”

But that option puts a lot of onus on the learner.

Even if the learner searches with that level of specificity, the content must:

- include the phrase “authentic leadership,” OR

- include both “authentic” and “leadership” somewhere in the text (no guarantee of relevance), OR

- be tagged appropriately
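To make the three conditions above concrete, here is a toy sketch in Python. The `Asset` class, the sample catalogue and the matching functions are hypothetical illustrations, not how any particular LXP’s search engine works; real search adds stemming, synonyms and ranking.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """A hypothetical learning asset: title, body text and curator-applied tags."""
    title: str
    text: str
    tags: set[str] = field(default_factory=set)


def _words(asset: Asset) -> set[str]:
    # Naive tokenisation: lowercase, split on whitespace, strip surrounding punctuation.
    return {w.strip(".,;:!?") for w in f"{asset.title} {asset.text}".lower().split()}


def phrase_match(asset: Asset, query: str) -> bool:
    """Condition 1: the exact phrase appears in the title or text."""
    return query.lower() in f"{asset.title} {asset.text}".lower()


def all_terms_match(asset: Asset, query: str) -> bool:
    """Condition 2: every query word appears somewhere, in any position."""
    return set(query.lower().split()) <= _words(asset)


def tag_match(asset: Asset, query: str) -> bool:
    """Condition 3: a curator has tagged the asset with the query as a skill."""
    return query.lower() in {t.lower() for t in asset.tags}


def search(catalogue: list[Asset], query: str) -> list[tuple[str, str]]:
    """Return (title, reason) for every asset that meets at least one condition."""
    results = []
    for asset in catalogue:
        if phrase_match(asset, query):
            results.append((asset.title, "phrase"))
        elif all_terms_match(asset, query):
            results.append((asset.title, "all terms"))
        elif tag_match(asset, query):
            results.append((asset.title, "tag"))
    return results


catalogue = [
    Asset("Leading with integrity",
          "Build trust by acting in line with your values.",
          {"authentic leadership"}),
    Asset("Authentic voices in marketing",
          "Leadership teams should review brand copy."),
    Asset("Team budgeting basics", "Plan your quarterly spend."),
]

print(search(catalogue, "authentic leadership"))
# → [('Leading with integrity', 'tag'), ('Authentic voices in marketing', 'all terms')]
```

The marketing asset matches both words but has nothing to do with leadership development, while the genuinely relevant asset is only found because a curator tagged it.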

Proper content tagging is the answer, but effectively tagging learning content is way easier said than done.

Here are a few best practices to think about when tagging learning content.

Ensure accurate skills definitions FIRST

Understatement: Skills are a pretty hot topic in our industry of late.

The data prove it out: Reskilling and upskilling is the #1 priority for 93% of businesses in 2021.

It’s crucial that skills are well-defined and well-thought-out as part of the learning content tagging process.

When defining skills, aim for definitions that:

- Are short and sweet: two sentences, max.

- Use examples: name the three assets that best explain the skill. An example of what the skill is not helps too.

- Use real-world language: avoid jargon and fancy phrasing.

- Have context: why are you tagging these skills? Tags are part of your learning and knowledge management system and need to work within it.

Effective, skills-centred curation is the answer.

This approach keeps learning practical and valuable. Your LXP must curate content with the nuances of the skills and job roles specific to your organisation in mind. In turn, the content will be valuable enough to keep learners coming back.

Make sure your process is efficient

Process matters.

Spend upfront time and effort to ensure your process is solid and well-thought-out.

It doesn’t matter if you use a learning platform (LXP, LMS), Content Management System (CMS), spreadsheet, or purpose-built tool to tag. Whatever you choose, err on the side of what’s most efficient for curators.

Allow them to collaborate effectively.

Take the time to think through how your tagging process will work. For example, if manual tagging is your only option, it’s much quicker to tag a batch of content to a single skill than to work out which of X skills best apply to each asset.

Beware of native tags!

Many content providers ship libraries prefilled with their own metadata.

In and of itself, this is not a bad thing: prefilled tags can speed up the process. However, this metadata is often surprisingly incomplete and inconsistent.

It tends to reflect the history of the content provider’s library development over the years instead of your capability priorities — a helpful input but not a reliable catalogue.

And certainly not a customised solution for your specific learning content needs.

Incorporate extra sets of eyes

For the content tagging that’s most essential to your organisation, make sure curators' work is marked independently.

This allows for a second (or third) set of eyes.

At Filtered, when configuring our tagging models, we employ a process analogous to “code review” in software development — all work gets checked.

Beyond double- or triple-checking work and limiting errors, this collaboration is also an opportunity for learning and shared understanding.

Understand the size of the issue

How much content do you have that needs tagging?

Before diving into a tagging process, it’s valuable to review some high-level summary statistics on the content you want to tag.

- How good are the existing titles and descriptions?

- What percentage of the content is already tagged?

- How accurate are the existing tags?
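These three questions can be turned into quick summary statistics. The sketch below is a minimal illustration under stated assumptions: the `CatalogueItem` record and its field names are hypothetical, “good description” is crudely approximated by a minimum word count (the threshold of 10 is arbitrary), and tag accuracy is computed only over items a curator has already reviewed.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CatalogueItem:
    """Hypothetical catalogue record; field names are illustrative only."""
    title: str
    description: str
    tags: list[str] = field(default_factory=list)
    # Curator's verdict on the existing tags: True/False, or None if not yet reviewed.
    tags_correct: Optional[bool] = None


def pct(part: int, whole: int) -> Optional[float]:
    """Percentage rounded to one decimal place; None when there is nothing to divide by."""
    return round(100 * part / whole, 1) if whole else None


def summarise(items: list[CatalogueItem], min_description_words: int = 10) -> dict:
    # A "good" description is approximated by word count; the threshold is an assumption.
    described = [i for i in items if len(i.description.split()) >= min_description_words]
    tagged = [i for i in items if i.tags]
    reviewed = [i for i in tagged if i.tags_correct is not None]
    correct = [i for i in reviewed if i.tags_correct]
    return {
        "pct_with_description": pct(len(described), len(items)),
        "pct_tagged": pct(len(tagged), len(items)),
        "pct_existing_tags_correct": pct(len(correct), len(reviewed)),
    }


items = [
    CatalogueItem("Negotiation essentials",
                  "A practical guide to preparing for and running a difficult negotiation well",
                  ["negotiation"], tags_correct=True),
    CatalogueItem("Spreadsheet tips", "Short.", ["leadership"], tags_correct=False),
    CatalogueItem("Feedback at work",
                  "An introduction to giving and receiving useful feedback at work"),
    CatalogueItem("Leading remote teams", "", ["leadership"], tags_correct=True),
]

print(summarise(items))
# → {'pct_with_description': 50.0, 'pct_tagged': 75.0, 'pct_existing_tags_correct': 66.7}
```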

It may turn out the “big tagging problem” you thought you had was not that large after all.

Use data to measure how well you’re tagging

If you can gather feedback on tagging quality (e.g., user-rated relevance in your LXP), this can guide where to invest effort and help you improve your curation skills and processes.


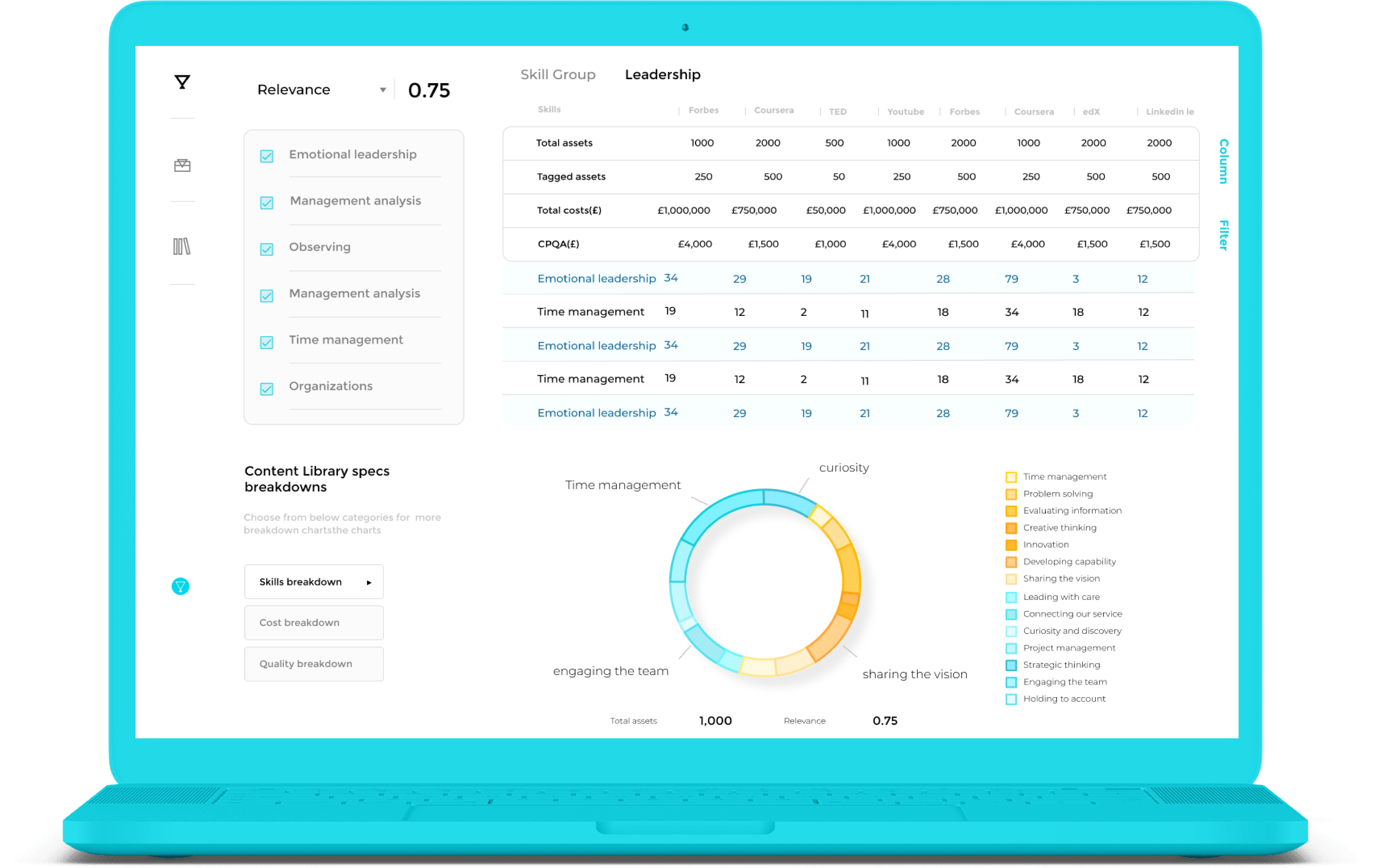

Generate data on the usefulness of your content libraries. Measure each library’s content provision against your company’s skills frameworks to find out where the skills gaps are and which libraries best fit your skills needs.
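One simple way to sketch this in Python is to count, per library, how many assets are tagged to each skill in the framework. This is an illustrative assumption, not Filtered’s method: the input shape (library name mapped to each asset’s set of tags), the `min_assets` gap threshold and the “most assets wins” notion of best fit are all hypothetical choices.

```python
from collections import Counter


def coverage(libraries: dict[str, list[set[str]]], framework: list[str], min_assets: int = 2):
    """Count assets per framework skill in each library, then flag gaps and best-fit libraries.

    `libraries` maps a library name to the tag sets of its assets (hypothetical shape).
    """
    skills = set(framework)
    # Only tags that belong to the skills framework are counted.
    per_library = {
        name: Counter(tag for tags in assets for tag in tags if tag in skills)
        for name, assets in libraries.items()
    }
    totals = Counter()
    for counts in per_library.values():
        totals.update(counts)
    # A "gap" here means fewer than `min_assets` assets across all libraries (arbitrary threshold).
    gaps = [skill for skill in framework if totals[skill] < min_assets]
    # Best fit = the library with the most assets tagged to that skill (ties go to the first listed).
    best_fit = {
        skill: max(per_library, key=lambda name: per_library[name][skill])
        for skill in framework
        if totals[skill] > 0
    }
    return per_library, gaps, best_fit


framework = ["negotiation", "coaching", "data literacy"]
libraries = {
    "Library A": [{"negotiation"}, {"negotiation", "coaching"}, {"time management"}],
    "Library B": [{"coaching"}, {"coaching"}, {"data literacy"}],
}
per_library, gaps, best_fit = coverage(libraries, framework)
print(gaps)      # → ['data literacy']
print(best_fit)  # → {'negotiation': 'Library A', 'coaching': 'Library B', 'data literacy': 'Library B'}
```

Raw counts are only a starting point; a library with many weakly relevant assets can look better than one with a few excellent ones, so relevance still needs human or model judgement.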

When optimising our automated tagging models, we ask customers to apply this simple check:

- Select a random sample of the tagged assets (around 50 max)

- Share the sample with a human curator, who reviews each tagging decision and marks the ones they agree with

- Calculate the percentage of sampled tagging decisions the curator agrees with

This provides a baseline for how aligned the tagging is and enables us to tweak and adjust as necessary.
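The three steps translate into a few lines of Python. This sketch assumes each tagging decision is stored as an `(asset, skill)` pair and uses a hypothetical `curator_agrees` callback to stand in for the human review; only the cap of around 50 items comes from the text.

```python
import random
from typing import Callable, Optional, Sequence

Decision = tuple[str, str]  # (asset id, skill tag); a hypothetical representation


def agreement_rate(
    decisions: Sequence[Decision],
    curator_agrees: Callable[[Decision], bool],
    sample_size: int = 50,
    seed: Optional[int] = None,
) -> float:
    """Sample tagging decisions, collect curator verdicts, and return % agreement."""
    if not decisions:
        raise ValueError("no tagging decisions to sample")
    rng = random.Random(seed)
    # Step 1: random sample, capped at `sample_size` (around 50 in the text).
    sample = rng.sample(list(decisions), k=min(sample_size, len(decisions)))
    # Step 2: the curator reviews each decision; the callback stands in for that review.
    agreed = sum(1 for decision in sample if curator_agrees(decision))
    # Step 3: percentage of the sample the curator agrees with.
    return 100 * agreed / len(sample)


decisions = [(f"asset-{i}", "negotiation") for i in range(40)]
verdicts = {d: i < 34 for i, d in enumerate(decisions)}  # curator agrees with 34 of 40
print(agreement_rate(decisions, verdicts.__getitem__, seed=7))  # → 85.0
```

With fewer than 50 decisions the whole set is reviewed, so the result is exact; with a larger catalogue the capped sample keeps review cheap but gives a rough estimate, which is why it serves as a baseline rather than a precise score.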

Content Intelligence solves the time-consuming, often inaccurate manual tagging process

Much of what we’ve discussed above applies to manually tagging your learning content.

Manual tagging is a lot of (unnecessary) work!

Imagine a world where tagging learning content could be done automatically ... in a fraction of the time.

Filtered’s Content Intelligence technology is the answer.

You need to determine which tags are necessary (skills must support business needs and goals) AND implement a useful curation process (manual, automated, or mixed) that judges the purpose and value of each piece of learning according to its content, its tags, and your skills framework.

At Filtered, we call this Content Intelligence — helping your organisation make data-driven learning content procurement and curation decisions.

Content Intelligence tags learning content accurately and efficiently by turning the text of the content itself into data. Our AI “reads” through your learning content assets and tags them according to predefined skills: the skills handpicked by our customers and advisors.

Bonus: The tagged content is also scored and ranked according to how well it fits each skill.

The process far beats the traditional answer — manual tagging.

Pair solid content tagging with well-thought-out content curation, and you have a winning formula: one that lends itself to personalising content for each learner, running engagement campaigns through integrations, building pathways, and catering for social learning.

Content Intelligence has helped some of the biggest, highest-performing companies in the world save hundreds of thousands of dollars and plug gaps in the content they offer.

Content Intelligence turns skill definitions into lists of accurately tagged content by reading the content itself, not just the title.

On average, our customers reduce their content spend by 30% and produce a library of impeccably tagged content at 10x the speed of human curators.

Are you ready for a more robust, accurate, faster, less expensive tagging solution? Get in touch to understand how we can help.