Billions are spent each year on learning content. Yet a Google search on running a ‘learning content RFP’ yields virtually nothing relevant. A rare piece of forward-thinking, content-focused writing in this area was this 2x2 model proposed by RedThread Research. But it’s the exception that proves the rule: it seems almost no one wants to talk about selecting content (except in CLO Coffee Club). And maybe that’s precisely because of how much is being spent. There’s a lot at stake.

However, if we don’t talk about how we buy the right learning content, we’ll never get the best return on our L&D spend; we’ll just keep buying the big brands. This article aims to redress that balance by explaining how to run a learning content RFP, so that people responsible for buying pre-built learning content can make better decisions. It’s also a call to buyers to simplify and add rigour to their content RFP process as they consider what exactly the ‘right content’ is.


1. Problem statement

You’re not ready to start your RFP process if you aren’t clear about what problem the content you might purchase is intended to solve.

Ask yourself what skills your organisation needs to build for the future, what skills gaps you are trying to close, and what performance outcomes matter to your organisation. This will drive your thinking about the topics you should be looking out for. The link may not be obvious; it may require some reflection and consultation. For example, if it’s important to your organisation to improve performance conversations, this might be best supported by content focused on having difficult conversations, giving effective performance-focused feedback or building trust in teams.

Arm yourself with data. What are people searching for now in your organisation? What are they not finding? What are they serving themselves with from the web or paying for out of their own pocket from providers? Some vendors can give you better data on what skills your people need than you might have available internally! And if they can’t, go straight to your colleagues and ask them. There’s always a path to some useful data, though that path is often not immediately clear.
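If your learning platform or intranet can export its search logs, a quick tally of the most frequent queries that returned nothing is one practical way to answer “what are they not finding?”. The sketch below is minimal and assumes a hypothetical export in which each row holds the query text and a result count. Real export formats vary by platform, and the data shown is invented.

```python
from collections import Counter


def top_unmet_searches(search_log, n=5):
    """Return the n most frequent queries that returned no results.

    search_log is an iterable of (query, result_count) pairs: a hypothetical
    stand-in for whatever your learning platform actually exports.
    """
    unmet = Counter(
        query.strip().lower()  # normalise case and whitespace so variants group together
        for query, result_count in search_log
        if result_count == 0
    )
    return unmet.most_common(n)


# Illustrative rows only, not real search data.
log = [
    ("Difficult conversations", 0),
    ("difficult conversations ", 0),
    ("excel pivot tables", 12),
    ("giving feedback", 0),
    ("python basics", 3),
]
print(top_unmet_searches(log, n=2))  # prints [('difficult conversations', 2), ('giving feedback', 1)]
```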

Think also about context. What do you already have, and what content gaps are you trying to fill? Will certain content types, such as skills practice content, be more useful to people? Will some formats, such as podcasts, videos or articles, be more practical to use?

And ask yourself - and keep asking yourself - are you sure content is the answer? The value is most often not in the content itself but in the onward discussion, application and ideation amongst colleagues, so be clear about why you might be buying content as part of a wider learning strategy, not as the end in itself.

2. Internal team

It’s important not to try to do this alone, even (especially) if you are an L&D team of one. Try to assemble a team that represents several important perspectives e.g. from different organisational divisions, and include people from procurement and IT right from the outset. You need to become partners in this shared task.

Think carefully how you will involve the intended end-users of the content from the get-go too. Aim to ground all decisions in practical end-user reality. You can’t speak to everyone, so focus on getting a short, sharp, representative view of whether they will gain value from Library X, Y or Z.

3. Learning Content RFP scorecard criteria

As a team, you’ll need to develop a decision-making rubric to use throughout the process, to help filter down from all vendors to a long list, a short list, and a final decision. Thinking about this upfront also removes some of the bias that can creep into a process once you start talking to persuasive, influential vendors.

Your scorecard will be unique to your company, your industry, your situation. But there are some factors that almost every organisation thinking about buying learning content should consider. Here are a few scorecard criteria.

About the provider

Experience & references

- What similar projects have they worked on?

- What’s not similar but transferable?

- What might you learn from some of these that can be brought to bear on your business needs?

Price

- What’s the overall price versus your budget?

- How does the price compare on a user or activated user basis?

- What downstream services, attention and care are included?

- Are there hidden costs for add-ons, content refreshes, reporting, extra licenses, etc?

Cheaper is not always better. Lean on your procurement team (if you have one) to help you find any devil in the details. Get clear on pricing early on and get vendor pricing ballparks soon after. Assuming you have a finite budget, this will helpfully filter out vendors that you just can’t afford. It will also, if you notify them that you are ruling them out on price, encourage the expensive vendors to reconsider their pricing! Costs for learning content can range from four figures (£1k+) to seven (£1m+), so qualify vendors in and out early to avoid wasted time.

Finally, be absolutely clear that you’re comparing apples with apples, in particular on whether the price covers every employee in your organisation or only activated licenses. With an average utilisation rate of 25%, the difference can be big.
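To see why the licensing basis matters, it helps to convert every quote to a total cost and a cost per activated user. The sketch below is minimal and uses the 25% utilisation rate mentioned above. The headcount, vendor names and unit prices are invented. It also ignores the minimums, tiers and other terms that real activated-licence deals may include.

```python
def annual_cost(unit_price, headcount, utilisation, basis):
    """Total annual cost of a quote, given its licensing basis.

    basis: "blanket" (every employee licensed) or "activated" (only users who activate).
    """
    activated_users = headcount * utilisation
    if basis == "blanket":
        return unit_price * headcount
    if basis == "activated":
        return unit_price * activated_users
    raise ValueError(f"unknown basis: {basis}")


headcount, utilisation = 10_000, 0.25  # 25% utilisation, as discussed above
quotes = {
    "Vendor A": (20.0, "blanket"),    # hypothetical: £20 per employee per year
    "Vendor B": (60.0, "activated"),  # hypothetical: £60 per activated user per year
}
for vendor, (unit_price, basis) in quotes.items():
    total = annual_cost(unit_price, headcount, utilisation, basis)
    per_active = total / (headcount * utilisation)
    print(f"{vendor}: total £{total:,.0f}, £{per_active:,.2f} per activated user")
```

With these made-up numbers, Vendor B’s headline unit price is three times Vendor A’s, yet B comes out cheaper overall (£150,000 against £200,000) and per activated user (£60 against £80).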

Lines of responsibility

- Will the vendor just provide access to the content and be done?

- Will there be Subject Matter Experts on hand? What will they do e.g. will they help you curate?

- What about content refreshes?

- Who will be responsible for Customer Success? Do they impress you?

Vendor-buyer chemistry

- How well does your team gel with the vendor?

While only moderately important during the RFP process, this relationship will become crucial (especially to your professional life) over a multi-year engagement.

Ease of integration

- How easy is it to import that content and get it working in your internal systems?

All vendors will say it’s easy! So ask them to specify in fine detail exactly what will be required and, if possible, set up a trial run to actually get some of that content into your systems as a test. Lean on the IT people in the internal team you assemble (as above) when vendors start talking technicalities and you need some support.

Success and enablement

- Does the vendor understand what you mean by success?

- What are the metrics? If a vendor is not interested in this - walk away!

- How do they expect to help you more than other vendors?

Communications

- How frequently and through what medium will the vendor be in touch?

- What reporting will you receive?

- What access to analytics will you be granted?

Weekly is probably too often for content but perhaps not at launch. Monthly? Quarterly? What about reporting? How detailed is this? Have you seen a sample output report? If not, ask for one.

Escalation process

- How do issues get escalated?

- What support team will be present?

- How quickly can you expect to receive a response?

About the content

There’s a fuller discussion in our related piece on Right content. Here’s an abridged version...

Relevance

- How well aligned is the offering to the high-value skills, topics, behaviours and values that you have prioritised?

Filtered has a lot to say on this matter, as it happens.

Quality

- What data can the vendor provide on quality e.g. completion/usefulness/relevance?

This is a thorny but important issue. It’s practically impossible to get quality data that is comparable across all your vendors. So you might ask each vendor the question, rate how convincing their answer is, and overlay that with any data they do provide. Ideally, also gather views from a focus group of end-users.

Impact

- What case studies can the provider show to evidence impact and performance?

To what extent will the content satisfy the needs of your intended audience, who will come with many varied and nuanced learning needs? And how quickly will people be able to tell whether their needs will be met? There’s very little patience in the world these days. We are all busy, and when we want a learning itch scratched, if we don’t get what we need fast, we move on, possibly never to return. This is why impact ties directly to engagement.

Data and metadata

- What data and metadata will be provided on the library content?

People don’t just need good, relevant, impactful content; they need to be able to find it. In a word, discoverability. Well-tagged libraries enable this.

Future-proofing

- What plans does the vendor have to develop their offering over time?

- Can they show you a roadmap?

The priorities of your organisation, the vendor and the world will change over the months and years ahead. How well aligned do you think you will be in 2025?

Languages

- How well do the library languages match your requirements?

If your organisation has multiple language requirements, that complicates things. No provider has all their content in all languages. And each provider that does offer multiple languages will do so in a different set of languages, and often it’s different languages for different (types of) content. This is almost another topic in its own right - make sure it’s treated as such and given due time and attention in the process, if it’s important to your situation.

Certification

- What external accreditation or credential does the library have?

- Does it meet technical or compliance requirements for your industry?

Does it matter if the content comes with some external accreditation or credential? For some this may be an important consideration, for others it is irrelevant (and may add significant cost and complexity). Think this through so you are clear on your position when you start to speak to vendors.

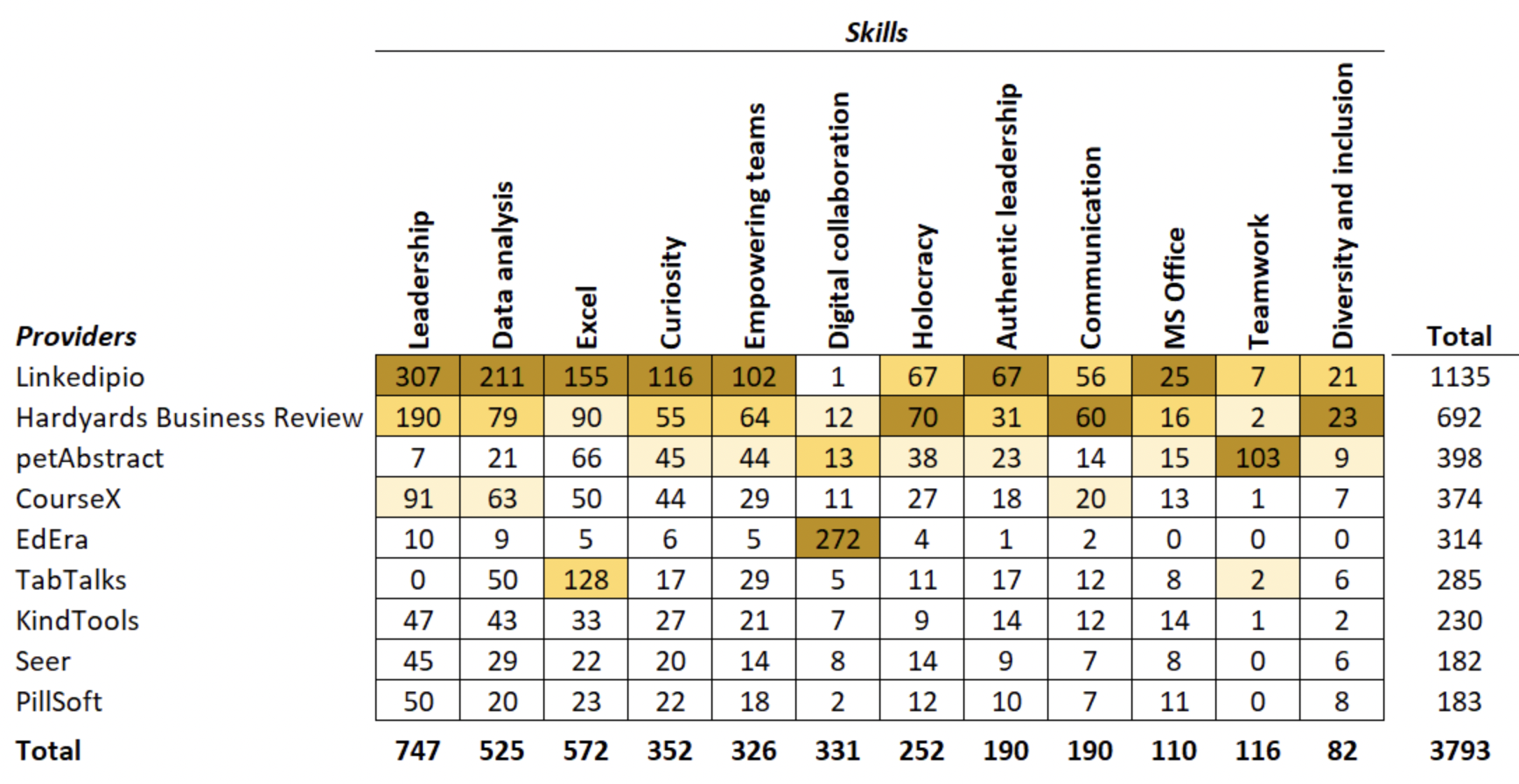

Cohesion and coverage

- How well does the new library addition complement your existing provision?

You need to make your collective choices work and give you the fullest coverage (and least overlap). You’re not (just) marking the individual attributes of each provider. You want to get to a heatmap of skills x provider so you can pick and mix the combination of vendors that gives you and your learners what they will need based on your skills framework. Here’s an example:

This can be an arduous process, so we often automate it almost entirely for our clients using Content Intelligence.
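Whatever tool you use, the underlying structure is a simple matrix of skills against providers. The sketch below is minimal and assumes you have already counted the relevant assets each provider offers for each skill in your framework. The skills, providers, counts and the threshold for “strong” coverage are all invented. The code prints a text heatmap and flags skills that have no coverage, as well as skills where more than one provider is strong. It is not a representation of Content Intelligence.

```python
# Hypothetical counts of relevant assets per skill (rows) and provider (columns).
coverage = {
    "Difficult conversations": {"Provider X": 14, "Provider Y": 3, "Provider Z": 0},
    "Giving feedback": {"Provider X": 9, "Provider Y": 11, "Provider Z": 0},
    "Data literacy": {"Provider X": 0, "Provider Y": 2, "Provider Z": 25},
    "Building trust in teams": {"Provider X": 0, "Provider Y": 0, "Provider Z": 0},
}
STRONG = 5  # assumed threshold: this many assets counts as strong coverage


def shade(count):
    """Map an asset count to a crude heat level: '.' none, '+' some, '#' strong."""
    if count == 0:
        return "."
    return "#" if count >= STRONG else "+"


providers = sorted({p for row in coverage.values() for p in row})
print(f"{'Skill':<26}" + "".join(f"{p:>12}" for p in providers))
for skill, row in coverage.items():
    print(f"{skill:<26}" + "".join(f"{shade(row.get(p, 0)):>12}" for p in providers))

# Skills no provider covers at all, and skills more than one provider covers strongly.
gaps = [skill for skill, row in coverage.items() if not any(row.values())]
overlaps = [
    skill for skill, row in coverage.items()
    if sum(1 for count in row.values() if count >= STRONG) > 1
]
print("Gaps:", gaps)
print("Overlaps:", overlaps)
```

Gaps point to where you might need a niche provider or in-house content. Overlaps point to where you may be paying twice.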

How to score a learning content RFP

It’s hard to compare and calibrate all of the above criteria. But start by choosing an overall bucket size: a total number of points, say 100. Then think about the relative importance of each of the factors you want to take into account and assign each a proportion. This will feel pretty arbitrary at first. But then let some of the internal team referred to above (SMEs, IT, business leads, end-users) look at the scorecard and have their say. This will help you iterate and will also increase buy-in across your organisation. You can also weight the scores to reflect whether each criterion is a must-have, should-have, could-have or won’t-have.
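The arithmetic behind this is straightforward. The sketch below is minimal and assumes the team has already split a 100-point bucket across the criteria, scores each vendor from 0 to 5 on each one, and applies a multiplier for each must/should/could/won’t-have priority. The criteria, weights, scale and multipliers are illustrative assumptions, not recommended values.

```python
# Illustrative split of a 100-point bucket across criteria.
WEIGHTS = {
    "Relevance": 30,
    "Price": 20,
    "Quality": 20,
    "Ease of integration": 15,
    "Languages": 10,
    "Certification": 5,
}
assert sum(WEIGHTS.values()) == 100, "weights should use the whole bucket"

# Hypothetical MoSCoW priority per criterion, and a multiplier for each level.
PRIORITY = {
    "Relevance": "must",
    "Price": "must",
    "Quality": "should",
    "Ease of integration": "should",
    "Languages": "could",
    "Certification": "wont",
}
MULTIPLIER = {"must": 1.0, "should": 0.8, "could": 0.5, "wont": 0.0}
MAX_SCORE = 5  # each criterion is scored 0-5


def weighted_score(scores):
    """Return a 0-100 score, or None if the vendor scores zero on any must-have."""
    earned = possible = 0.0
    for criterion, weight in WEIGHTS.items():
        factor = weight * MULTIPLIER[PRIORITY[criterion]]
        score = scores.get(criterion, 0)
        if PRIORITY[criterion] == "must" and score == 0:
            return None  # failing a must-have rules the vendor out
        earned += factor * score / MAX_SCORE
        possible += factor
    # Normalise by the points still in play so the result stays on a 0-100 scale.
    return round(100 * earned / possible, 1)


vendor_a = {"Relevance": 4, "Price": 3, "Quality": 5,
            "Ease of integration": 2, "Languages": 5, "Certification": 1}
print(weighted_score(vendor_a))  # prints 74.5
```

Normalising by the points still in play keeps the result on a 0-100 scale even after won’t-have criteria are zeroed out. Treating a zero on a must-have as disqualifying is one reasonable reading of must-have, but not the only one.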

As you go through this iterative scorecard creation process, ask yourself repeatedly what problem you are trying to solve. Continue to check whether and to what extent content is really the answer.

When Filtered supports clients in library procurement decisions, we work with them to turn these priorities into structured criteria that can be assessed using robust metrics and visualisations based on the asset data. In this example, we encapsulate the client’s focus on a few core organisational capabilities in a simple metric and rich visualisations:

Content pilots, trials and proof of concepts

How about running two or three pilots, trials, experiments or proofs of concept instead? Is that a shortcut around the circuitous process of running an RFP? It might be a good idea to run an experiment. But with whom? You will still need much of the above and below to decide this robustly and fairly. Even a free pilot is not really free once you consider the fully loaded cost of your time, your team’s time, end-user evaluation, etc.

However, a pilot or series of pilots might be the right conclusion to draw at the end of the RFP process. And if that’s the case, state it clearly in your...

4. Learning content RFP document

You’ve done the hard preparatory work. But if it’s not turned into a good RFP document that can be used externally, that hard work won’t necessarily translate into excellent results.

A good RFP is important to level the playing field and provide the basis for a fair and robust vendor comparison. It’s also a public, outward-facing representation of your company – reputation is at stake here. And there’s so much more our industry needs to do to make these documents more effective in drawing out the value that different vendors offer.

The exact structure and format of the document itself will vary by use-case, company and industry, but a good learning content RFP document should usually include:

- Exec summary

- Project overview and company background

- Deliverable timelines

- Key considerations and potential roadblocks

- Budget

- Success criteria

- Must-haves, e.g. tech specifications, ethics including supply chain, ESG, infosec, etc.

- Decision-making process and marking scheme

- Admin: confidentiality, supporting documentation, submission instructions

The exec summary is not administrative padding; it’s important. Many of the influential readers of your RFP will stop at the end of it. It therefore needs to convey what your RFP is really about, so that they can qualify themselves in or out as appropriate. The more they do that, the easier your job and the higher the quality of your eventual choice.

5. Content library longlist

Conducting a market scan to create your library longlist is easy. Conducting a market scan well is not.

For a start, how many is long? It’s futile to give a precise number; everyone’s context and need is different. But a longlist of more than 50 is going to take a long time to put together and leave a lot of work to cut down to a shortlist. And a longlist of fewer than 10 is likely to leave a lot of decent providers without a chance.

There are lists of ‘top’ vendors and review sites that you can and should look at. But beware: none of these are comprehensive, and many have inherent bias or even paid promotion. Relying on this approach alone is inadvisable; it brings only the advantages of theft over honest toil, along with multiple disadvantages.

An alternative, and in our view better, approach is to consider some of the important categories of content providers. That might look something like this:

List of learning content providers by type

- General-purpose

- Format-focused

- Marketplaces

- Aggregators

- MOOCs

- Subject specialists

- Web crawlers

- The web itself, from reputable organisations like McKinsey to the long tail of smaller creators of mixed quality

You might choose to divide up the market differently. But however you see it, this method speeds up the process: it will rule some categories in so you can devote more time there; it will rule some categories out so you can save time there; it will make your search more specific and return better, more useful results.

Note that the above are only examples of vendors - many of them very well known - to illustrate the point. There are many, many other excellent options out there - some of them just starting out and doing outstanding, innovative work, so don’t be blinded by the big names or indeed the list above. There are also aggregators of suppliers that might help you, such as venndorly.com and simplybrilliance.com.

Once you’ve arrived at your longlist and you’re happy with the RFP (try to make these two events coincide), send the latter to the former and wait for the responses to come in.

6. Content library shortlist

What’s better than a longlist? A shortlist!

Apply your scorecard criteria to each vendor that responds to your RFP. Document every decision you make, so that the process is fair and transparent and leaves a paper trail. Record answers in a structured template, along with the scores and the scoring formula.
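A structured template can be as simple as one record per vendor per scorer, filed alongside the scoring formula. The sketch below is minimal and assumes JSON as the storage format. The class, field names and example values are hypothetical, and the formula is stored as a plain description for the audit trail.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class VendorAssessment:
    """One scorer's assessment of one RFP response (a hypothetical template)."""
    vendor: str
    scorer: str
    assessed_on: str  # ISO date, e.g. "2024-01-15"
    answers: dict = field(default_factory=dict)    # criterion -> summary of the vendor's answer
    scores: dict = field(default_factory=dict)     # criterion -> 0-5 score
    rationale: dict = field(default_factory=dict)  # criterion -> why that score was given
    scoring_formula: str = "weighted sum of 0-5 scores, normalised to 0-100"


record = VendorAssessment(
    vendor="Vendor A",
    scorer="Scorer 1",
    assessed_on="2024-01-15",
    answers={"Languages": "Core library in six languages; video is English-only"},
    scores={"Languages": 3},
    rationale={"Languages": "Covers our main sites, but video has no subtitles"},
)
# Serialise to JSON so the record can be filed with the rest of the paper trail.
print(json.dumps(asdict(record), indent=2))
```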

Score independently first, then come together as a team to share and moderate your scores and agree on a final position.
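Before the moderation meeting, it can help to combine the independent scores and flag where scorers disagree most, so that discussion time goes where it is needed. The sketch below is minimal and assumes each scorer gives one vendor a 0-5 score per criterion. The scorer names and scores are invented, and the 2-point disagreement threshold is an arbitrary assumption.

```python
from statistics import mean


def moderation_agenda(scores_by_scorer, spread_threshold=2):
    """Average each criterion across scorers and flag large disagreements.

    scores_by_scorer: {scorer: {criterion: score}} for a single vendor.
    Returns (averages, flagged), where flagged lists criteria whose
    max - min score across scorers is at least spread_threshold.
    """
    criteria = sorted({c for scores in scores_by_scorer.values() for c in scores})
    averages, flagged = {}, []
    for criterion in criteria:
        values = [s[criterion] for s in scores_by_scorer.values() if criterion in s]
        averages[criterion] = round(mean(values), 2)
        if max(values) - min(values) >= spread_threshold:
            flagged.append(criterion)
    return averages, flagged


independent = {
    "L&D": {"Relevance": 4, "Quality": 4, "Ease of integration": 3},
    "IT": {"Relevance": 3, "Quality": 4, "Ease of integration": 1},
    "Procurement": {"Relevance": 4, "Quality": 3, "Ease of integration": 2},
}
averages, flagged = moderation_agenda(independent)
print(averages)
print("Discuss first:", flagged)
```

An average is only a starting point. The point of moderating is to understand *why* IT scored integration so much lower, not simply to split the difference.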

The best-scoring vendors will constitute your shortlist. How many you include will depend on the quality of the responses, how much time you have and how cohesive they are as a group. But we think a good content library shortlist has around 3-10 vendors.
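Turning final scores into a shortlist is mostly a matter of judgement, but a simple, written-down rule keeps it consistent. The sketch below is minimal and assumes one moderated 0-100 score per vendor, with any vendor that failed a must-have already removed. It keeps between 3 and 10 vendors above a hypothetical minimum score. All names and numbers are placeholders.

```python
def shortlist(final_scores, min_score=60.0, min_size=3, max_size=10):
    """Rank vendors by score and keep those at or above min_score.

    Keeps at most max_size vendors. If fewer than min_size clear the bar,
    it tops the list up with the next best vendors so there is still a
    meaningful comparison (a judgement call you may prefer to make by hand).
    """
    ranked = sorted(final_scores.items(), key=lambda item: item[1], reverse=True)
    above_bar = [vendor for vendor, score in ranked if score >= min_score]
    if len(above_bar) < min_size:
        above_bar = [vendor for vendor, _ in ranked[:min_size]]
    return above_bar[:max_size]


scores = {"Vendor A": 74.5, "Vendor B": 81.0, "Vendor C": 58.0,
          "Vendor D": 66.2, "Vendor E": 40.0}
print(shortlist(scores))
```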

7. Final evaluation and decision(s)

You’ll now need to fine-tune your scorecard evaluation through interviews with the vendors on your shortlist.

Meet with each and hold a structured interview - treat each vendor as similarly as possible. This is like a job interview; some of the people you meet in this process may become as important to your professional life as your close colleagues. It’s worth the investment of time at this point. You’ll likely need at least 90 minutes with each vendor.

Consider cohesion and coverage more seriously now (with a smaller number of players, this becomes mathematically and conceptually possible!). It’s now less a question of who’s best and more of which combination will serve you best.
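With only a handful of shortlisted vendors, it becomes feasible to check every combination rather than compare vendors one at a time. The sketch below is minimal and assumes each vendor has been reduced to the set of framework skills it covers well, with a cap on how many libraries you will buy. The skills, vendors and the ranking rule (most skills covered first, then least overlap) are illustrative assumptions.

```python
from itertools import combinations

# Hypothetical: the framework skills each shortlisted vendor covers well.
covers = {
    "Vendor A": {"feedback", "difficult conversations", "trust", "coaching"},
    "Vendor B": {"feedback", "data literacy", "coaching"},
    "Vendor C": {"data literacy", "cloud basics"},
    "Vendor D": {"trust", "cloud basics", "negotiation"},
}
framework = set().union(*covers.values())


def evaluate(combo):
    """Return (skills covered, redundant skill-vendor pairs) for a combination."""
    covered = set().union(*(covers[v] for v in combo))
    overlap = sum(len(covers[v]) for v in combo) - len(covered)
    return len(covered), overlap


def best_combinations(max_vendors):
    """Check every combination of up to max_vendors vendors and return all
    that cover the most skills with the least overlap, plus those two figures."""
    scored = [
        (evaluate(combo), combo)
        for size in range(1, max_vendors + 1)
        for combo in combinations(sorted(covers), size)
    ]
    most = max(covered for (covered, _), _ in scored)
    least = min(overlap for (covered, overlap), _ in scored if covered == most)
    winners = [combo for (covered, overlap), combo in scored
               if covered == most and overlap == least]
    return winners, most, least


winners, most, least = best_combinations(max_vendors=2)
for combo in winners:
    print(" + ".join(combo), f"covers {most}/{len(framework)} skills, overlap {least}")
```

Here two pairings tie, which is a prompt for the interviews rather than something to resolve mechanically. The number of combinations grows quickly with the number of vendors, so an exhaustive check like this is only practical once the list is short.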

The conversations can and should now get into the weeds. Bring up specific issues with specific content you’ve reviewed. Ask in detail about their content roadmap and how confident they are that it will be delivered. Ask them about which other vendors they feel would complement their own coverage and why they think that.

In the end, it’s likely that you will have a small number of very appealing options. It’s often the case that there’s a frontrunner for your main library (that provides 80%+ of the material your people will need) and then a small handful of more niche providers that serve particular subject areas or learning preferences (format, length, style, etc).

Finally, hold a concluding meeting with your team to discuss the results of the process. Then, hopefully with consensus, it’s time to pull the trigger! Secure sign-off, break out the budget and let the procurement team do its thing. After that, it’s on to integrations, launch campaigns and, most importantly, getting the new content to your people. And the journey is only beginning, as you start thinking about ongoing optimisation and curation.

All this sounds like a lot, but it doesn’t have to be. A run-through of the steps described above can be carried out relatively quickly and straightforwardly, and even just following a few of them will add rigour and clarity and steer you clear of some of the pitfalls that many others have fallen into. We certainly hope it leads you to a better outcome. And that outcome might even be to not buy any content at all! Either way, if you need a steady hand to help guide you through, you know where to find us.

Helen Smyth is a senior L&D professional with over 30 years’ multi-sector, global experience. Her current passions are performance improvement, Agile ways of working and taking a product management approach to learning. She has bought and helped to create a mountain of content to support learning over the years and is currently pondering how much of a good thing that is! The views shared in this article are her own and not a representation of those inside any organisation she is/has worked for.

Thanks also to Francesco Mantovani for advice on this article.

Credits for 3D models: Noah, helijah, osmosikum, mudkipz321. All 3D models downloaded from Sketchfab.com and licensed under CC attribution. Links to all models used (follow hyperlinks for credit info for each individual model): ASK 21 Mi, Junkers G 38, de Havilland DH.89 Dragon Rapide, Plane, Caravan