Redundancy in language

I don't know if you tried to read the image at the top of this article. If not, give it a go now.

It's easier than you might expect, given that the letters of each word have been scrambled. That's possible because there is a lot of redundancy in our language. There's only one English word you can turn 'OTO' into, and only two English anagrams of 'UMCH'. Change a few letters in a sentence and you are unlikely to produce another sentence that means something different. Even with a few errors, you can recover the meaning.
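A computer can do the same unscrambling by exploiting that redundancy: two words are anagrams exactly when their letters, sorted, are identical, so sorted letters make a lookup key. The sketch below assumes a tiny hypothetical word list (`WORDS`) standing in for a full English dictionary; `build_anagram_index` and `unscramble` are illustrative names.

```python
from collections import defaultdict

# Tiny hypothetical vocabulary; a real check would load a full English word list.
WORDS = ["too", "tool", "much", "chum", "mulch", "word", "meaning"]


def build_anagram_index(words):
    """Map each word's sorted letters to every word sharing those letters."""
    index = defaultdict(list)
    for word in words:
        index["".join(sorted(word))].append(word)
    return index


def unscramble(scrambled, index):
    """Return all known words that are anagrams of the scrambled input."""
    key = "".join(sorted(scrambled.lower()))
    # .get avoids inserting empty entries into the defaultdict for unknown keys.
    return sorted(index.get(key, []))


index = build_anagram_index(WORDS)
print(unscramble("OTO", index))   # ['too']
print(unscramble("UMCH", index))  # ['chum', 'much']
```

Sorting letters costs O(k log k) per word, so the whole dictionary can be indexed once and each scrambled word then resolved with a single lookup.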

This redundancy in language is useful for communication, in the same way that having two kidneys is useful for survival. Miscommunication is less likely: a lot has to go wrong before the meaning is lost, whether the language is written or spoken. But redundancy is also inefficient. In circumstances where the context is clear, it can be stripped away. Airports can be designated LHR, CDG, JFK; countries uk, fr, de, in. When you already know what I'm talking about, and what I'm likely to say, redundancy becomes a cost rather than a safeguard.
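One rough way to see how much redundancy ordinary English carries is to pass it through a general-purpose compressor, which works by removing statistical redundancy. The sketch below uses Python's standard `zlib` module as an illustrative choice; the sample text is taken from this article, and `compression_ratio` is a hypothetical helper name.

```python
import zlib

# Sample text: sentences from this article (any ordinary English prose would do).
SAMPLE = (
    "It's easier than you might expect, given that the letters of each word have been "
    "scrambled. That's possible because there is a lot of redundancy in our language. "
    "Change a few letters in a sentence and you are unlikely to produce another sentence "
    "that means something different. Even with a few errors, you can recover the meaning. "
    "This redundancy in language is useful for communication, in the same way that having "
    "two kidneys is useful for survival. Miscommunication is less likely: a lot has to go "
    "wrong before the meaning is lost, whether the language is written or spoken."
)


def compression_ratio(text: str) -> float:
    """Return compressed size / original size; lower means more redundancy was removed."""
    raw = text.encode("utf-8")
    compressed = zlib.compress(raw, 9)  # level 9 = strongest compression
    # Compression is lossless: the original bytes can be recovered exactly.
    assert zlib.decompress(compressed) == raw
    return len(compressed) / len(raw)


print(f"compressed to {compression_ratio(SAMPLE):.0%} of original size")
```

The exact ratio depends on the text and the compressor, but the point is that the same message survives in fewer bytes once the redundancy is squeezed out.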

Computers and language

Until relatively recently, computers and software worked in highly specific, context-rich environments. Applications were written to perform narrow tasks in narrow domains. When computers communicated, or we communicated with them, the information had to be highly structured for them to grasp our meaning: "Enter the date in the right format." "Select the item from the drop-down." "Press 0 to speak to a human."

But as artificial intelligence starts to tackle much more complex problems, so computers need to cope with much less structured inputs. The built-in redundancy of natural language - human spoken and written language - becomes useful. This is partly for the same reason that redundancy is useful in our communication: where context is unclear or very broad, redundancy can make errors in interpreting meaning less likely. In addition, access to meaning in human speech and writing vastly increases the data software can draw on and interpret.
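A small-scale version of this error tolerance: because most misspellings stay closer to the intended word than to any other word in the vocabulary, software can often recover what was meant. The sketch below uses `difflib.get_close_matches` from Python's standard library; the vocabulary and the `correct` helper are hypothetical, and real NLP systems use far richer context than single-word similarity.

```python
import difflib
from typing import Optional

# Hypothetical vocabulary; a real system would use a full dictionary or domain word list.
VOCABULARY = ["learning", "leaning", "earning", "content", "context"]


def correct(word: str, vocabulary: list, cutoff: float = 0.6) -> Optional[str]:
    """Return the closest vocabulary word, or None if nothing is similar enough."""
    # get_close_matches ranks candidates by difflib.SequenceMatcher similarity (0.0 to 1.0).
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else None


print(correct("lerning", VOCABULARY))  # learning
print(correct("xyz", VOCABULARY))      # None
```

The `cutoff` trades recall against false corrections: raise it and fewer garbled inputs are "fixed", but those that are fixed are more likely to be right.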

L&D and NLP

Natural language processing (NLP) is the analysis of human language, by computers, to extract meaning. Its applications include the already commonplace: search functionality (within documents or across the internet); spam detection and filtering in your email service; voice recognition by your bank's customer service phone line; grammatical suggestions as you split infinitives in your LinkedIn posts. And there are a host of others just around the corner.
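Spam filtering, one of the applications above, shows how text can be turned into a decision. The following is a toy multinomial naive Bayes classifier, a classic textbook technique chosen here for illustration; it is not how any particular email service works, and the class name and training sentences are made up.

```python
import math
from collections import Counter


def tokenize(text: str) -> list:
    """Lower-case whitespace tokenizer; real filters use far richer features."""
    return text.lower().split()


class NaiveBayesSpamFilter:
    """Toy naive Bayes classifier with add-one (Laplace) smoothing.

    Needs at least one training example per label before classifying.
    """

    LABELS = ("spam", "ham")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def _log_score(self, words: list, label: str) -> float:
        vocab_size = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        total_words = sum(self.word_counts[label].values())
        # log P(label) plus the sum of log P(word | label); the +1 smoothing
        # stops an unseen word from driving the probability to zero.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in words:
            score += math.log((self.word_counts[label][word] + 1) / (total_words + vocab_size))
        return score

    def classify(self, text: str) -> str:
        words = tokenize(text)
        return max(self.LABELS, key=lambda label: self._log_score(words, label))


spam_filter = NaiveBayesSpamFilter()
spam_filter.train("win a free prize now", "spam")
spam_filter.train("free money click now", "spam")
spam_filter.train("meeting notes for tomorrow", "ham")
spam_filter.train("please review the course notes", "ham")
print(spam_filter.classify("claim your free prize"))  # spam
print(spam_filter.classify("notes for the meeting"))  # ham
```

Working in log probabilities avoids numeric underflow when many small per-word probabilities are multiplied together.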

At Filtered we have been working on a couple of innovations, made possible by NLP, that we think will drive change in learning & development over the next few years.

Algorithmic Content Curation

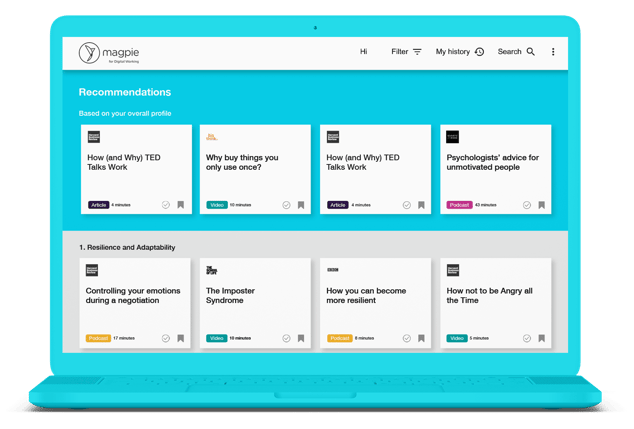

We've developed an algorithmic stack, Magpie, that lets you make sense of, and extract value from, any set of learning content.

Taking raw content as its input, Magpie classifies learning assets (courses, articles, videos, anything) in the language of your business or sector, organises them in line with your objectives, and prioritises them for individuals to maximise impact.

Magpie combines domain knowledge, in the form of concept graphs, with domain-agnostic machine learning techniques driven by usage data and NLP, so that it incorporates both sector expertise and individual and organisational nuance. By training neural networks to emulate human decision-making, the algorithms are bootstrapped with human expertise and can embody local (e.g. organisational) preferences and priorities.
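Magpie's internals aren't described here, so the following is only a generic sketch of the broader idea: tagging content against a concept graph, then ranking it for a learner. The graph, its concepts, and the functions `tag_asset` and `prioritise` are all hypothetical, and simple keyword matching stands in for the NLP and machine learning mentioned above.

```python
import re

# Hypothetical concept graph: each concept has trigger terms and an optional broader concept.
CONCEPT_GRAPH = {
    "communication": {"terms": {"communication"}, "broader": None},
    "negotiation": {"terms": {"negotiate", "negotiation", "bargaining"}, "broader": "communication"},
    "presenting": {"terms": {"presentation", "presenting", "slides"}, "broader": "communication"},
    "finance": {"terms": {"finance", "accounting"}, "broader": None},
    "budgeting": {"terms": {"budget", "budgeting", "forecast"}, "broader": "finance"},
}


def tag_asset(text: str) -> set:
    """Tag content with every matching concept plus all of its broader concepts."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    tags = set()
    for concept, node in CONCEPT_GRAPH.items():
        if words & node["terms"]:
            current = concept
            # Walk up the graph so an asset about negotiation also counts as communication.
            while current is not None and current not in tags:
                tags.add(current)
                current = CONCEPT_GRAPH[current]["broader"]
    return tags


def prioritise(assets: dict, interests: set) -> list:
    """Rank asset titles by how many of their tags match the learner's interests."""
    scored = []
    for title, text in assets.items():
        score = len(tag_asset(text) & interests)
        if score > 0:
            scored.append((-score, title))
    # Highest score first; ties broken alphabetically by title.
    return [title for _, title in sorted(scored)]


assets = {
    "Negotiation basics": "How to negotiate a better deal",
    "Budget planning": "Build a budget and a forecast",
    "Pitch decks": "Presentation slides that land",
}
print(prioritise(assets, {"communication"}))  # ['Negotiation basics', 'Pitch decks']
```

Rolling tags up to broader concepts is what lets an asset be found in "the language of your business" at whatever level of detail a learner or organisation cares about.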

Chatbots

Chatbots, intelligent software that can process human language and respond sensibly and usefully to it in real time, are increasingly used in customer service and technical support. This opens up possibilities in L&D for performance support and, more immediately, for learners to take more natural, nuanced control of their learning. We've incorporated a conversational interface into our Magpie learning recommendation engine: give it a go, let us know what you think, and watch this space as we add to its capabilities in the coming months.