Evaluating and iterating your learning content strategy is a key part of the process as we’ve outlined in our Learning Content Strategy Playbook.

After all, a learning content strategy should be adaptive and agile to address both external and internal changes. In fact, we’ve found that organisations that are at the highest level of the Learning Content Strategy Model evaluate and iterate constantly.

TL;DR

Evaluating and iterating your learning content strategy is crucial to keeping it effective and relevant.

- Revisit goals, and redesign them based on business context.

- Gather data on content starts/completes, email opens/clicks, social shares/comments, and business performance.

- Analyse data to determine which skills/formats/departments performed best.

- Make improvements based on data analysis.

- And repeat - constantly evaluate and iterate to address changes.

Let's dive into each of these points in more detail below. 👇

Revisit your goals

Content strategy goals can be diverse and somewhat abstract, ranging from fostering skill development to cultivating a culture of self-directed learning or supporting talent mobility platforms.

Ideally, your strategy initially established two to five clear goals tied to business objectives, along with measurable metrics for tracking progress.

For example:

The goal was a richer, smoother learning experience to contribute to employee retention, and you had survey and interview measurements to show a link between any improvement in retention and your strategy.

If this sounds more specific than your initial goals, don't worry. It's crucial to reassess your goals at the beginning of each evaluation and iteration process. Now is the time to acknowledge that those goals were too vague to measure progress against, and to revisit them.

Vague or precise, you first need to revisit those goals.

- Are they still appropriate to the business context?

- Did the goals change mid-period? (This isn’t necessarily a bad thing.)

- Do you need to retrospectively change any aspect of the goals?

- Will your data and measurements be sufficient?

The world and business change quickly. It’s probably not a good sign if you need to ditch your initial goals completely during every review cycle, but it’s absolutely right that you redesign goals based on what actually happened and measure appropriately.

A good model for changing the specifics of a goal while keeping the overall aspiration is the Objectives and Key Results (OKRs) system, developed at Intel and later adopted by Google.

Qualitative objectives (‘a richer, smoother learning experience’) should be agreed at a senior level and shouldn’t change within the goal cycle; Key Results (‘five point increase in user satisfaction rating’, ‘reduce content/format overlap to no more than 20%’) can be modified if they no longer fit the business context.
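To make the split between a fixed objective and adjustable Key Results concrete, here is a minimal Python sketch. The class names (`Objective`, `KeyResult`, `Goal`), the `revise_key_result` helper, and the numbers are all hypothetical, chosen for illustration only; this is not part of any OKR tool.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Objective:
    """Qualitative aim agreed at a senior level; its wording is frozen."""
    statement: str


@dataclass
class KeyResult:
    """Measurable target that may be revised if the business context shifts."""
    description: str
    target: float
    current: float = 0.0

    def progress(self) -> float:
        # Fraction of the target reached so far, capped at 1.0.
        if self.target <= 0:
            return 0.0
        return min(self.current / self.target, 1.0)


@dataclass
class Goal:
    objective: Objective
    key_results: list = field(default_factory=list)

    def revise_key_result(self, index: int, new: KeyResult) -> None:
        # Swap one Key Result; the objective is left untouched.
        self.key_results[index] = new


# Hypothetical example values.
goal = Goal(
    objective=Objective("A richer, smoother learning experience"),
    key_results=[
        KeyResult("Increase in user satisfaction rating (points)",
                  target=5.0, current=2.0),
    ],
)

# Mid-cycle the context changes, so only the Key Result is revised.
goal.revise_key_result(
    0,
    KeyResult("Increase in user satisfaction rating (points)",
              target=3.0, current=2.0),
)
```

Freezing `Objective` means an accidental edit to its wording raises an error, while Key Results stay easy to swap out during a review.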

We recommend a 90-minute session at the beginning of your strategy to prioritise needs, so that your team focuses on the right things.

Collect data

At a minimum, the data to gather should include:

- Content starts and completes

- Email opens and clicks

- Social shares and comments

- Business performance data for the audience you reached

Ideally, this data should exist ahead of implementing the learning content strategy. If it doesn’t, there should be an agreed-upon process to gather the data in the same way before and after, so that you can measure the change. Be sure to have a mix of both quantitative and qualitative data to have a holistic view.
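As a rough illustration of measuring the change, the sketch below compares a completion rate gathered the same way before and after a strategy iteration. The function names and all of the counts are made up for the example; substitute whatever your platform actually exports.

```python
def completion_rate(completes: int, starts: int) -> float:
    """Share of started content items that were completed."""
    if starts == 0:
        return 0.0
    return completes / starts


def percentage_point_change(before: float, after: float) -> float:
    """Difference between two rates, in percentage points."""
    return round((after - before) * 100, 1)


# Hypothetical counts, collected with the same method in both periods.
rate_before = completion_rate(completes=48, starts=120)   # 0.4
rate_after = completion_rate(completes=75, starts=150)    # 0.5

change = percentage_point_change(rate_before, rate_after)
print(f"Completion rate moved by {change} percentage points")
```

The comparison only holds if both periods were measured identically, which is why the collection process needs to be agreed up front.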

Collect any other relevant information that makes sense for your goals, such as qualitative feedback from key stakeholders.

Analyse your data

It can be easy to be overwhelmed by all of the data, so it’s important to ask the right questions, such as:

- Which skills resonated with users and which didn't?

- Which formats?

- What departments engaged the most over a period?

- How did performance differ between departments in that time?

- Can you isolate the impact of content on individuals and teams?
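Several of these questions come down to grouping the same engagement data in different ways. Here is a small sketch, assuming you can export one row per department and format with start and complete counts. The field names, the `completion_rate_by` helper, and the sample rows are invented for illustration.

```python
from collections import defaultdict


def completion_rate_by(records, key):
    """Aggregate starts/completes per group and rank groups by completion rate."""
    totals = defaultdict(lambda: {"starts": 0, "completes": 0})
    for row in records:
        totals[row[key]]["starts"] += row["starts"]
        totals[row[key]]["completes"] += row["completes"]

    rates = {
        group: t["completes"] / t["starts"]
        for group, t in totals.items()
        if t["starts"] > 0  # skip groups nobody started
    }
    # Highest completion rate first.
    return dict(sorted(rates.items(), key=lambda item: item[1], reverse=True))


# Hypothetical export rows.
records = [
    {"department": "Sales", "format": "video", "starts": 200, "completes": 150},
    {"department": "Sales", "format": "article", "starts": 100, "completes": 30},
    {"department": "Engineering", "format": "video", "starts": 80, "completes": 40},
    {"department": "Engineering", "format": "article", "starts": 120, "completes": 90},
]

by_department = completion_rate_by(records, "department")
by_format = completion_rate_by(records, "format")
```

This kind of summary only describes what happened. It does not by itself isolate the impact of content on individuals or teams, which needs the business performance data as well.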

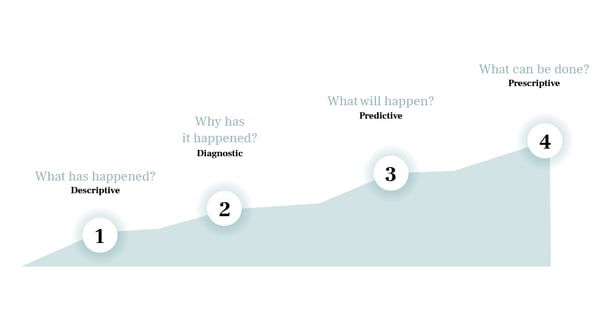

Based on your needs, consider the 4 types of analytics.

- Descriptive: What has happened?

- Diagnostic: Why has it happened?

- Predictive: What will happen?

- Prescriptive: What can be done?

Since you’ll be looking back on your learning content strategy’s performance, it’s most likely that you will be leaning heavily on descriptive and diagnostic analytics.

Source: Learning Analytics – the complete guide by HCM Deck

Success won’t just look different from organisation to organisation, but also at every level. Be sure that you’ve agreed with stakeholders on what your success metrics look like:

- Individual outcomes: skill certifications, career growth

- Business level metrics: retention, performance, growth

Make improvements

If the issues that you have been trying to address are still recurring, try opportunity mapping to get to the root of the problem and improve your outcomes in the next iteration of your strategy.

At the risk of sounding like a broken record, the strength of your learning content strategy comes down to how well it is delivering outcomes aligned with business objectives. Ensure that any improvements you make are for this purpose, and this purpose alone.

And repeat

As you can see in our diagram, the Learning Content Strategy Playbook is intended to be cyclical. In other words, it’s a flywheel where you’ll see improvement with every iteration.

Schedule regular intervals where you’ll evaluate and iterate your content strategy. We recommend either quarterly or every six months. Any more often than that and you risk not collecting enough data to draw conclusions from.