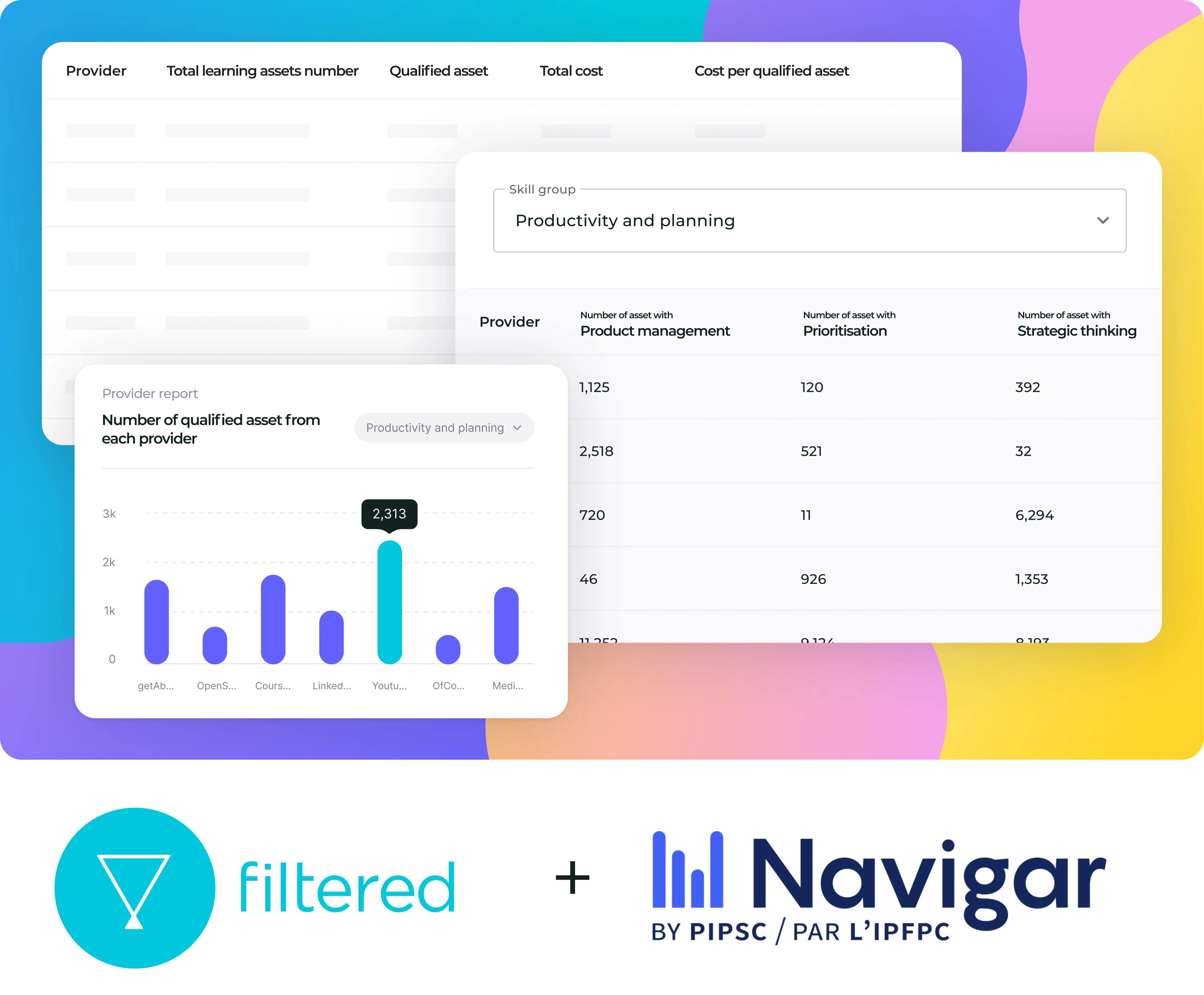

FILTERED X PIPSC case study

Discover how Filtered helped PIPSC curate pathways twice as fast, with much greater diversity and quality


What difference does Filtered make to curation speed and quality?

"We're building a skills development platform where our members can go to figure out what their skills are, understand their skill gaps and access recommendations and training pathways to help them upskill or reskill. We rely on Filtered heavily from the curation perspective. I work with Filtered every other week: we have conversations about the best way to streamline our curation process."

Salah Bazina, Research Officer (Content Development Lead), PIPSC

How much better is Filtered’s curation tech than existing platforms and tools?

One very direct way to find out is to measure the difference scientifically, in a controlled trial.

By "controlled", we mean that we hold every other variable constant. Everything except the difference Filtered makes is the same:

✅ It’s the same content library

✅ The same mix of curation topics and pathway briefs

✅ The person curating is the same

✅ And the person reviewing the output is the same

This is exactly what we did with PIPSC. PIPSC stands for the Professional Institute of the Public Service of Canada, a union with more than 70,000 members that represents scientists and professionals employed by the government at all levels in Canada.

PIPSC is helping its members navigate the changing world of work with the Navigar project. The goal is to develop a user-friendly platform where members can learn about the changes affecting their jobs, access learning opportunities that help them thrive amid those changes, and get support in seeking their employer's approval to fund their educational goals.

"We're working right now with a vendor that has 40,000 courses and training materials, so we do rely on Filtered because we need to filter it down. Filtered is super important for us."

Salah Bazina, Sr. Researcher (Content Development Lead), PIPSC

The project combines a custom-built front end, content from a leading digital learning provider, and Filtered's technology. It requires many hundreds of role- and topic-specific learning pathways to be curated to shape the learning platform and guide users. That requirement, in turn, gave us the opportunity to test Filtered's curation interface in a like-for-like comparison with the platform supplied by the content provider.

The result? Pathways were curated in Filtered twice as fast (7 minutes vs 14 minutes) and reached a much higher level of diversity and quality than pathways built with the curation tools native to the content provider's own system.

Note that this trial compared Filtered against the native search tool of a single content provider. Most organisations curate from at least two or three libraries, and that extra complexity amplifies the curator's time saving to 5x or even 10x.


David Manocchio, the curator who ran the experiment, said that Filtered made curating for technical skills much easier, as he could use its relevance scores as a clear indication of suitability without needing validation from a subject-matter expert (SME). He was also able to use the filters effectively to cut down the number of assets to review and choose from, gaining both efficiency and speed. According to David, compared with the content provider's tools, Filtered is:

- Conclusive: the relevancy score ensures that the most suitable assets are surfaced and presented in descending order, so relevant or notable courses are not missed or overlooked.

- Efficient: finding assets, creating playlists and downloading the relevant data is a quick, user-friendly process.

🔬 How we did it - in detail:

Want to run a controlled trial like this for curation, or for any other technology? Here's our exact method.

- We will measure the quality of 20 curated playlists of content (10 unique playlists × 2 methods) and the time it takes to produce them.

- We will make this measurement both (A) using Content Intelligence and (B) using a control process/toolkit. The control will consist of the content provider's native search and curation functionality.

- Curator(s) will produce the playlists in blocks:

  - 10 playlists produced using process A;

  - 10 playlists produced using process B.

- To control for ordering effects, we will switch the order (B → A) between curators.

- Curators will track the time taken to complete the task.

- We will measure the quality of the resulting playlists through independent review, using a structured marking scheme:

  - Overall relevance of the playlist;

  - Content diversity;

  - Sequencing and progression;

  - Attractiveness.

- The playlist origin (process A or B) will be hidden from the reviewers.
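If you want to reuse this protocol, the bookkeeping can be sketched in a few lines of code. The following is a minimal Python sketch under stated assumptions, not the tooling used in the PIPSC trial: the function names (`block_order`, `schedule`, `blind`, `summarize`), the 1-5 score per criterion and the equal weighting of the four criteria are all hypothetical choices made for illustration. It shows the three mechanics the method relies on: alternating block order between curators, hiding the process behind opaque IDs for reviewers, and comparing mean time and mean quality per process.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from statistics import mean

# The four marking-scheme criteria from the protocol. Scoring each on a
# 1-5 scale and weighting them equally are assumptions of this sketch.
CRITERIA = ("relevance", "diversity", "sequencing", "attractiveness")


def block_order(curator_index: int) -> tuple[str, str]:
    """Counterbalance ordering effects: even-indexed curators run A then B,
    odd-indexed curators run B then A."""
    return ("A", "B") if curator_index % 2 == 0 else ("B", "A")


def schedule(curators: list[str], briefs: list[str]) -> list[tuple[str, str, str]]:
    """Each curator produces every brief once per process, in two blocks."""
    plan = []
    for i, curator in enumerate(curators):
        for process in block_order(i):
            for brief in briefs:
                plan.append((curator, process, brief))
    return plan


@dataclass
class Playlist:
    brief: str
    process: str  # "A" (Content Intelligence) or "B" (control)
    minutes: float  # time tracked by the curator
    scores: dict[str, int] | None = None  # filled in later by the reviewer


def blind(playlists: list[Playlist], seed: int = 0) -> dict[str, Playlist]:
    """Shuffle the playlists and key them by opaque IDs. Reviewers should
    receive only the IDs and playlist content, never this mapping."""
    shuffled = playlists[:]
    random.Random(seed).shuffle(shuffled)
    return {f"P{n:02d}": p for n, p in enumerate(shuffled, start=1)}


def summarize(playlists: list[Playlist]) -> dict[str, float]:
    """Mean minutes and mean quality per process, plus the B/A time ratio."""
    out: dict[str, float] = {}
    for proc in ("A", "B"):
        group = [p for p in playlists if p.process == proc]
        out[f"{proc}_minutes"] = mean(p.minutes for p in group)
        out[f"{proc}_quality"] = mean(
            mean(p.scores[c] for c in CRITERIA) for p in group
        )
    # A ratio of 2.0 means process A took half as long as the control.
    out["speedup"] = out["B_minutes"] / out["A_minutes"]
    return out


if __name__ == "__main__":
    # Hypothetical curators and briefs, used only to show the schedule shape.
    plan = schedule(["curator_1", "curator_2"], [f"brief_{n:02d}" for n in range(1, 11)])
    print(plan[0])   # ('curator_1', 'A', 'brief_01')
    print(plan[20])  # ('curator_2', 'B', 'brief_01')
```

Keeping the ID-to-process mapping out of the reviewers' hands is what makes the review blind; `summarize` is only run once every playlist has been scored.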